Chapter 7: Logic & Reasoning

7.3 Logic

What is Logic?

Does the word “logic” thrill you? Do you enjoy doing logic puzzles? If so, you’re probably in the minority. Do you like pointing out the logical flaws in popular movies? (e.g., in A Quiet Place, why doesn’t the family move to the waterfall so the monsters can’t hear them?) Your friends might not enjoy watching movies with you, and might suggest that you shut off your logical brain and just enjoy the story. But making logical sense is an important skill for anyone, and even if logic puzzles give you headaches, having a grasp of logic is an advantage in life. The good news: while the study of logic can get abstract and baffling, logic’s basic principle is simple: Logic is the process of combining assertions to reach a conclusion.

Earlier, I mentioned syllogisms, the classical form of deductive logic. If you start with the assertion that “All dogs are cute,” and combine it with the observation that “Scooter is a dog,” you’re led to the logical conclusion that “Scooter is cute.” True? Judge for yourself:

Perhaps the assertion “All dogs are cute” needs a qualifier, but the logic of the syllogism does hold up. On the other hand, “All dogs are cute; Wall-E is cute; therefore Wall-E is a dog” is not logically valid (there are other cute things besides dogs).
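If you like seeing ideas spelled out mechanically, here is a minimal sketch of what "logically valid" means for the two arguments above. It is an illustration under simplifying assumptions, not a full logic engine: each "world" contains just one individual, who either is or isn't a dog and either is or isn't cute, and the function and variable names are invented for this example. An argument counts as valid if no possible world makes all the premises true and the conclusion false.

```python
from itertools import product

def is_valid(premises, conclusion):
    """Return True if no possible world makes every premise true and the conclusion false."""
    for dog, cute in product([True, False], repeat=2):
        world = {"dog": dog, "cute": cute}
        if all(premise(world) for premise in premises) and not conclusion(world):
            return False  # counterexample: premises hold, conclusion fails
    return True

def all_dogs_are_cute(world):
    # Applied to our one individual: if it is a dog, then it is cute.
    return (not world["dog"]) or world["cute"]

def it_is_a_dog(world):
    return world["dog"]

def it_is_cute(world):
    return world["cute"]

# "All dogs are cute; Scooter is a dog; therefore Scooter is cute."
scooter_argument = is_valid([all_dogs_are_cute, it_is_a_dog], it_is_cute)

# "All dogs are cute; Wall-E is cute; therefore Wall-E is a dog."
wall_e_argument = is_valid([all_dogs_are_cute, it_is_cute], it_is_a_dog)

print(scooter_argument)  # True
print(wall_e_argument)   # False
```

The Wall-E argument fails because of the world in which the individual is cute but not a dog: both premises are true there, yet the conclusion is false. Notice that the check says nothing about whether "All dogs are cute" is actually true; validity is only about how the assertions fit together.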

If you want to learn about the rules of logic, Britannica’s Laws of Thought page will give you plenty to chew on. For the rest of you, here are six different forms that logic can take:

- Deductive logic: starting with a generalization, and applying it to a specific instance.

- Inductive logic: using specific instances to form a generalization or find a pattern.

- Analogies: Thing A is like Thing B, and we can carry over lessons from A to B (e.g., “Life is like a box of chocolates; you never know what you’re going to get”). One form of analogy is a precedent argument: if something happened in a previous time and place, it will probably happen again (“In Germany, the Autobahn highway has no speed limits in most areas, and it has worked out fine, so we can lift the speed limit on American highways, too.”).

- Hypothetical reasoning: reasoning based on the phrases “if only” and “even if,” which tend to lead in opposite directions. “If only” arguments generally point to a need for change, as in “If only we made the possession of assault rifles illegal, the deaths from mass shootings would go down — therefore we need to ban those guns.” “Even if” arguments suggest that change would be ineffective, as in “Even if we make assault rifles illegal, people will still get their hands on them, so it won’t make any difference.”

- Criteria matching: this kind of reasoning is especially useful in definitional arguments and evaluation arguments (arguing whether a thing is good or bad, right or wrong). Criteria are required features, standards, or qualities, often expressed as “must haves.” If you’re in a definitional argument about whether bowling is a sport, for example, you might come up with criteria for defining a sport: involves athletic skill, is competitive, involves risk of injury, has organized leagues, and requires special shoes. Someone making the evaluation argument that the final season of Game of Thrones was terrible might call it repetitive, contradictory, and sexist — criteria for a bad television show.

- Sign reasoning: reaching a conclusion about the state of things based on observable signs, as in “You can tell someone is lying if their hands get fidgety” [See the “Spotting Liars” section of Chapter 12].

When someone calls an argument “logical,” they are essentially saying “I accept the truth of the assertions in the argument, and the way those assertions are put together seems to work.” In contrast, calling something “illogical” is another way of saying that some of the assertions in the argument are not true, or there is something wrong with the way they are combined. Figuring out exactly what is wrong can be challenging, but at least there is a shortcut to spotting flawed arguments: knowing the logical fallacies.

Logical Fallacies

It’s valuable to know about fallacies because they are not just flawed arguments; they are flawed arguments that sound logical to some people. In fact, many people have built lucrative careers off of fallacies, but there are two reasons you shouldn’t be one of those people:

- It’s unethical (see Chapter 3)

- People who know about fallacies can call you out on them

This section is a “spoiler”: by reading it, you’ll learn arguments that you can’t ethically use anymore. Well, except for one thing: that implies that fallacies are categorically easy to identify — either an argument is a fallacy or it isn’t. If you study them closely, however, you’ll discover that they don’t work that way, and there is no clear dividing line between a valid argument and a fallacious one. That doesn’t mean you can’t distinguish between good logic and fallacies (that would be an example of the “Fallacy of the Beard”, #12 below); it just means that you have to deal with some ambiguity. Think about the discussion of fences vs. cores in Chapter 3: the core approach says that these fallacies are further away from the core than good logical arguments, even if you can’t pinpoint the exact location of the fence.

Students of logical fallacies may notice an interesting linguistic feature: many of these fallacies go by Latin names. This is because the Ancient Romans were fascinated by them, and made long lists of them. The more recent tendency is to use English names, but I’ll include the Latin names in case you like showing off, or run across someone else using one. Here are a dozen common fallacies, with some variants:

- Bandwagon Fallacy (“Ad Populum”): if it’s popular, then it must be good. When ads brag that a movie is “the #1 film in America,” they imply that it’s a good movie, but they’re blurring popularity with quality or morality. The Futility Fallacy, a variation of this, says that there’s no point in having a law against something if people keep doing it anyway (such as pirating movies). It’s a variation of the Bandwagon Fallacy because it’s essentially saying “If it’s popular, then it should be legal.”

- Either-Or Fallacy (also known as “False Dichotomy”): when you sort the world into two categories, and argue that everyone who isn’t in Category A must be in Category B. Example: “Are you with us or against us? If you’re not part of the solution, you’re part of the problem.” Logical flaw: it ignores the middle ground, or other options besides A and B.

- Ad Hominem (attack the person): if you can’t refute the argument, attack the credibility of the person making it. Sometimes it’s legitimate to question someone’s credibility, but this becomes a fallacy when it’s intended to divert attention away from the topic or shut down debate. This is an example of a Genetic Fallacy, which says that an idea should be evaluated based on where it comes from or who thought of it (its genesis), rather than evaluated on its own terms. There’s even a positive variant on this: if a brilliant person thought of it, it must be a brilliant idea. (Sorry to say, even brilliant people have dumb ideas, and vice versa).

- Part for the Whole (also known as the Fallacy of Composition): assuming that what is true for one part of an entity is true for the whole thing. “Portland, Oregon is a liberal city, therefore the whole state of Oregon is liberal.”

- Whole for the Part (also known as the Fallacy of Division): assuming that what is true for a greater entity is true for every part of that entity. “Oklahoma is a conservative state, therefore Alison, who lives in Oklahoma, is conservative.”

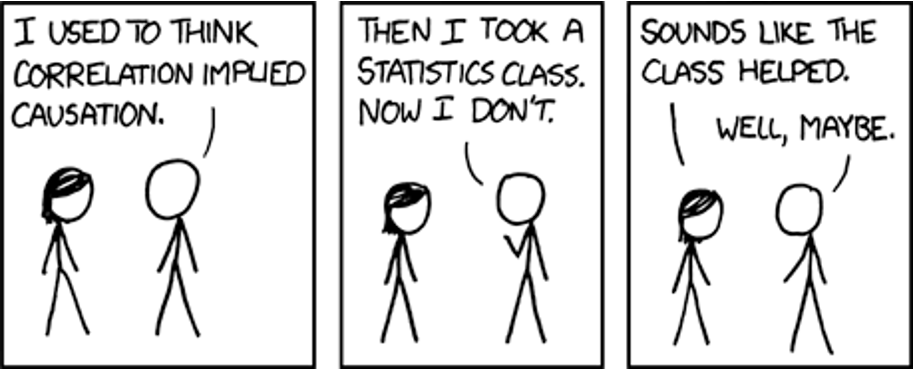

- Faulty Causality (still sometimes known by the Latin phrase “Post Hoc Ergo Propter Hoc” — sometimes shortened to “Post Hoc” — which means “After this, therefore because of this”): Assuming that because Event B came after Event A, A must have caused B to happen. You blew on a pair of dice right before you won at the craps table, so blowing on the dice is what made you win. Superstitions are obvious examples, but even careful scientific studies can fall prey to assuming that an effect was caused by something that happened before it. This is why scientists are reluctant to use the word “caused.”

- Appeal to Ignorance (“Ad Ignorantiam”): This may sound like the arguer is counting on the audience being poorly informed, but instead it’s an abuse of the principle of burden of proof. You may have heard about burden of proof in the context of criminal trials (where the prosecution has to prove that the defendant is guilty beyond a reasonable doubt), but it applies to non-legal contexts as well: if your roommate accuses you of leaving dirty dishes in the sink and you say “Name one time I did that,” you are putting the burden on them to prove it instead of you proving it never happens.

The Appeal to Ignorance fallacy is about situations where the person shifts the burden onto another person, but takes it a step further: if they don’t prove that you are wrong, you count that as proof that you are right. As the previous fallacy suggests, proving anything is difficult, so “absence of proof” is not the same as “proof of absence.” It also overlaps with the Either/Or Fallacy, since it ignores the middle ground possibility that nothing is proven either way. If your friend asserts that birds aren’t real and tries to get you to prove that they are, don’t fall for it: if you can’t figure out how to prove something as simple as the existence of birds, they might declare themselves the winner of the debate. Instead, I suggest you respond with, “Wait a minute, you’re the one with the loopy idea; it’s your job to prove it’s true.” But if you’re trying to avoid the Appeal to Ignorance fallacy, don’t pounce on them if they fumble the answer; just take it as a sign of how hard it is to prove anything.

- Slippery Slope: assuming that if you take one policy step in a certain direction, it will inevitably lead to disaster. Initiatives to legalize gay marriage, for example, have long been met with predictions that legalization will eventually mean people will be able to marry their dogs. While it’s true that actions do have consequences and that the American legal system is based in part on precedent (if you made legal ruling X before, it means you must make ruling Y now), the word “slippery” implies that there’s nothing that can be done to stop the catastrophic outcome once that first step is taken. This is one particular fallacy where the dividing line between legitimate argument and logical fallacy is so blurry that the radio show Freakonomics devoted an entire 49-minute episode to trying to untangle it.

- Straw Man: deliberately oversimplifying or mischaracterizing your opponent’s position so you can make it look foolish or extreme, like knocking down a person made out of straw. There’s a simple test for Straw Man: if your opponent is listening and they object to how you represented their view (“Hey wait a minute, you’re putting words in my mouth!”), you’ve committed the Straw Man fallacy; if they say, “Actually yes, that is my position,” you haven’t. What if the opponent doesn’t get a chance to respond? One way to prevent the problem is to find quotes of things they actually said or wrote (not taken out of context) instead of making sweeping generalizations on their behalf. But even if you quote directly, you can still commit a variant, known as the Weak Man fallacy: look through all the arguments your opposition makes, find the weakest among those arguments, then make it sound like it’s their only argument. For a discussion of Weak Man, see this Atlantic article.

- Fallacy of Obversion: If that word looks unfamiliar (don’t confuse it with “observation”), it’s because you’re not a coin collector, a member of the only group of people who use it regularly. When you think “obverse,” think “the other side of the coin.” The thing about coins, of course, is that you can’t see both sides at the same time: there’s a side you can see (the known), and a side you can’t (the unknown). The fallacy of obversion is assuming you know what the unknown option looks like based on the option you can see. If you go to a sporting event in a large stadium with many entrances, you might pick the south entrance, but if there’s a long line and you say “We picked the wrong line! The north entrance would have been faster,” you’re making that inference (unless you can actually see that north entrance). Watch for this in political ads against incumbents: “The current governor has done a bad job; vote for Mary Hum, the better choice.” Mary Hum is the unknown side of the coin.

- False Equivalence (also known as “Moral Equivalence”): if you can refer to two things by the same name, or put them both in the same category, then there is no moral distinction between the two. “My foot was too close to your car when you backed up, and you ran over my foot. That makes you just as bad as a driver who runs over a pedestrian and kills them.”

- The Fallacy of the Beard (also known as the Paradox of the Heap): This one is best illustrated with photographs of George Clooney:

Does George Clooney have a beard? In photo 1, you might say an unequivocal no; in photo 4, you might say a definite yes. But what about photos 2 and 3, or an infinite number of pictures that could have been taken between the two? When is the exact moment his stubble became a beard? Another way to argue it is: yes he does have a beard in photo 1, just a really, really short one, so logically speaking, there’s no difference between pictures 1 and 4. The “heap” version of the fallacy goes: is one grain of rice a “heap” of rice? How about two grains? Three? What about 57 or 93 or 248? Or go the other way: take a million grains of rice and start taking them away: If you can’t pinpoint the exact number when it becomes a heap or stops being one, there is either no such thing as a “heap” or a single grain is a heap. This is the argument you might make when you are stopped for speeding and you say, “Aw c’mon, I was only doing 38 mph — what’s the difference between 35 and 38?” If there’s no real difference between 35 and 38, there’s no difference between 38 and 41, or 41 and 44, all the way up to 200 mph. The flaw is that it doesn’t recognize the importance of arbitrary cutoffs in life.
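The speeding example can be sketched in a few lines of code. This is only an illustration of the arithmetic: the 35 mph limit and the 3 mph steps come from the example above, while the function and variable names are my own. It shows that a chain of individually "negligible" steps adds up to an enormous difference, and that a fixed cutoff still gives a clear answer at every point along the way.

```python
SPEED_LIMIT = 35  # mph, the posted limit in the example above

def is_speeding(mph):
    """An arbitrary but clear cutoff: anything over the limit counts."""
    return mph > SPEED_LIMIT

# Climb from 35 to 200 mph in 3 mph steps: 35, 38, 41, ..., 200.
speeds = list(range(35, 201, 3))

# Every individual step is the same "what's the difference?" 3 mph...
steps = [faster - slower for slower, faster in zip(speeds, speeds[1:])]

# ...but the chain as a whole covers the entire range.
total_difference = speeds[-1] - speeds[0]

print(len(steps), max(steps), total_difference)  # 55 3 165
print(is_speeding(35), is_speeding(38), is_speeding(200))  # False True True
```

No single step looks like much, but 55 of them take you from the speed limit to 200 mph. The cutoff at 35 is arbitrary in the sense that 34 or 36 might have served just as well, yet once it is chosen, it settles the question for every speed.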

If a dozen fallacies isn’t too much for you, I’ll add on two more oddly named fallacies that have received a lot of attention in recent years:[1] Whataboutism and Sealioning.

- Whataboutism: This could be considered a form of Red Herring (where you deliberately shift the topic away from an argument you don’t want to deal with), mixed with a little Ad Hominem. The trick is simple: if someone wants to talk about something you feel protective about and you don’t have a good way to defend it, shift to a different topic that the other person feels defensive about. In the 1999 film Office Space, Joanna (Jennifer Aniston) confronts her boyfriend Peter (Ron Livingston) about his plan to skim profits from his employer, which she calls “wrong.” Peter turns it back on her, implying that she too is selling out her soul for an evil corporation. There wouldn’t be anything wrong with wanting to talk about her employer, except that his goal is to deflect the topic away from himself.

VIDEO: To hear John Oliver discuss whataboutism and the connection to Moral Equivalence (Fallacy #11), watch this:

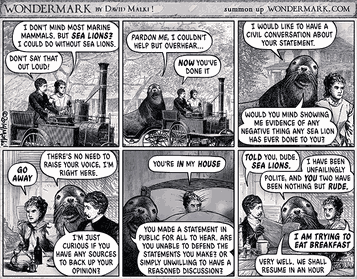

- Sealioning: The name might be difficult to decipher unless you know about this cartoon:

This refers to a technique that is all too easy to use on social media: keep asking questions, in the name of exploring a topic, until your respondent is exhausted and tells you to buzz off. This makes the “sea lion” look reasonable and the other people look closed-minded and uninterested in looking at the evidence, until you realize that the stated goal was always a pretext. You may see a connection to the Appeal to Ignorance Fallacy (#7). Bailey Poland calls it an “incessant, bad-faith invitation to engage in debate.”[2]

VIDEO: If you want to see a video rundown of some of these fallacies, and dozens more, check out “31 logical fallacies in 8 minutes” by Jill Bearup:

[1] If you read Box 6.2 in the previous chapter, you might recognize what I just did as “Sweetening the Deal.”

[2] Poland, B. (2016). Haters: Harassment, Abuse and Violence Online. University of Nebraska Press. As cited in Sullivan, E., Sondag, M., Rutter, I., Meulemans, W., Cunningham, S., Speckmann, B., & Alfano, M. (2019). Can real social epistemic networks deliver the wisdom of crowds? In Lombrozo, T., Knobe, J., & Nichols, S. (Eds.), Oxford Studies in Experimental Philosophy (Vol. 3). Oxford University Press.